In case you're unfamiliar with it, Halide is a popular iPhone camera app that has been available on the App Store for years. Today, its developers explained in a blog post how the depth-sensing features of the iPhone SE's camera work.

The iPhone SE 2020 is Apple's latest low-cost iPhone. It pairs modern internals with the same design as the iPhone 8. Its camera module is even interchangeable with the iPhone 8's, as iFixit's recent teardown of the device revealed.

Yet despite sharing the iPhone 8's camera module, the iPhone SE 2020 offers depth-sensing capabilities that the iPhone 8 lacks, thanks to on-device software improvements.

Halide's developers explain exactly what those software improvements are. According to Halide, the second-gen iPhone SE "goes where no iPhone has gone before" by using "Single Image Monocular Depth Estimation." In other words, the phone can create a portrait effect from a single 2D image, deriving depth entirely through machine learning.

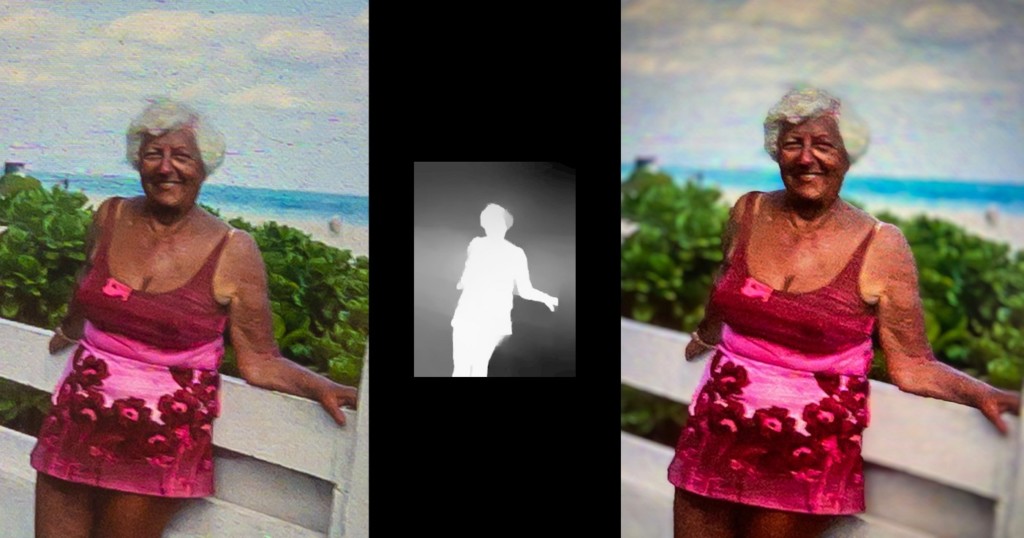

You can test this by using Halide itself to take a photo of another photo. In the article, the developer demonstrates the feature by photographing a 50-year-old slide of his grandmother, and the iPhone successfully turns it into a portrait image.

This works because the new iPhone SE uses machine learning to generate impressive depth maps. They are considerably better than those of the iPhone XR, which also has a single camera but partly relies on its hardware to produce a portrait effect.

The neural network behind the iPhone SE 2020's camera is quite advanced. It is very good at segmenting the different parts of an image, which is an important step in estimating depth. Unfortunately, it can only produce proper portrait shots of people. With any other subject, it confuses the background with the foreground.

The iPhone SE 2020's camera algorithms also struggle to judge distances accurately. The article also shows the camera producing better portrait shots of dogs than of plants. This suggests the machine learning model may not have been trained on enough plant images.

Finally, the iPhone SE's portrait effect for people relies on "Portrait Effects Matte," an API that Apple made available to developers around the launch of the iPhone XR. It provides a high-resolution matte that separates the person in the foreground from the background. This lets the iPhone distinguish the subject from its surroundings more precisely and produce a convincing portrait effect.

All of this image processing is made possible by Apple's powerful A13 Bionic chip. The same chip powers Apple's latest flagships, the iPhone 11 and iPhone 11 Pro.

For more insight into how the iPhone SE 2020's camera works, head over to Halide's original blog post. It's a good read.

What do you think about the new camera features in the second-gen iPhone SE? Let me know in the comments below.